The amount of data and information around us grows with every passing second. Estimates indicate that the total volume of information generated each year is expected to reach the order of zettabytes (1 zettabyte = 10²¹ bytes, that is, a 1 followed by 21 zeroes). This gigantic increase has several causes. First, our surroundings and our very existence are being increasingly digitised (from biometrics as identity, we are fast moving towards virtual worlds and metaverses). Second, domains such as defence, security and climate change require continuous, round-the-clock streams of data to be acquired, analysed and monitored to extract useful operational insights. Third, consistent miniaturisation and advances in electronics (semiconductors) over the last few decades have made data generation, acquisition and storage cheaper than ever before.

This enormous amount of raw data is of little value by itself unless meaningful insights and patterns are extracted from it in an efficient and time-bound manner. On this front, we are witnessing an explosion of computational techniques such as artificial intelligence (AI), machine learning and deep learning. While advanced AI techniques such as deep learning have proved useful for applications including computer vision and natural language processing (NLP), they come with some inherent trade-offs. First, they require a huge amount of human supervision for training (for example, datasets with 18 million annotated images and 11,000 categories).[1] Second, they dissipate an unsustainable amount of energy during the development phase. According to one study,[2] training the powerful language model GPT-3 led to 552 tonnes of carbon dioxide emissions, equivalent to the emissions of 120 cars driven for an entire year.

Finding clues in the human brain

To overcome some of these limitations, researchers have turned towards nature, looking for clues in the way human (or mammalian) brains function and process information. The human brain is a computational marvel. It is estimated to consume about 20 watts of power while performing intelligent actions that no supercomputer in the world can match, even while dissipating megawatts of power. The latest generation of energy-efficient neural networks inspired by the working of the human brain is known as spiking neural networks (SNNs). The field of science and engineering that deals with the design and development of SNNs is known as neuromorphic computing. It is a highly interdisciplinary topic, lying at the intersection of computer science, computer architecture, computational neuroscience, semiconductors and artificial intelligence.

In the brain, neurons are the cells responsible for processing and transmitting information, and they generally communicate through the language of spikes. The connection between two neurons is called a synapse. The flexibility of synapses as a conducting medium for inter-neuron communication is a critical factor underlying the extreme learning capabilities of the brain. It is, in fact, the strength of the synapses that defines the form and structure of a neural network in nature. In neuromorphic computing, hardware designers and computer scientists take broad inspiration from the findings of computational neuroscience and the observations of neurobiologists, and try to build artificial brains in silicon. While biological brains are extremely complex and not fully understood, neuromorphic designs, or artificial brains, are at best highly simplified, efficient computational systems for specific applications.
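To make the idea of spikes and synaptic strength concrete, the following is a minimal sketch of a leaky integrate-and-fire neuron, one of the simplest models commonly used in spiking neural networks. It is not taken from any specific chip or from the works cited here; the function name `simulate_lif` and the values chosen for `threshold`, `leak` and the weights are illustrative assumptions.

```python
def simulate_lif(input_spikes, weights, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    input_spikes: list of time steps, each a list of 0/1 values (one per synapse).
    weights: synaptic strengths, one per input synapse (illustrative values).
    Returns a list of 0/1 output spikes, one per time step.
    """
    potential = 0.0
    output = []
    for spikes in input_spikes:
        # Leak: the membrane potential decays towards zero at every step.
        potential *= leak
        # Integrate: each incoming spike adds the weight of its synapse.
        potential += sum(w * s for w, s in zip(weights, spikes))
        if potential >= threshold:
            output.append(1)   # fire an output spike
            potential = 0.0    # reset after firing
        else:
            output.append(0)
    return output


# The same input spike train, seen through weak and strong synapses.
inputs = [[1, 0], [1, 0], [0, 1], [0, 0], [1, 1]]
weak = simulate_lif(inputs, weights=[0.3, 0.2])
strong = simulate_lif(inputs, weights=[0.6, 0.5])
print("weak synapses:  ", weak)
print("strong synapses:", strong)
```

With identical inputs, the neuron with stronger synapses fires earlier and more often, which is a toy illustration of how synaptic strength shapes what a network computes.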

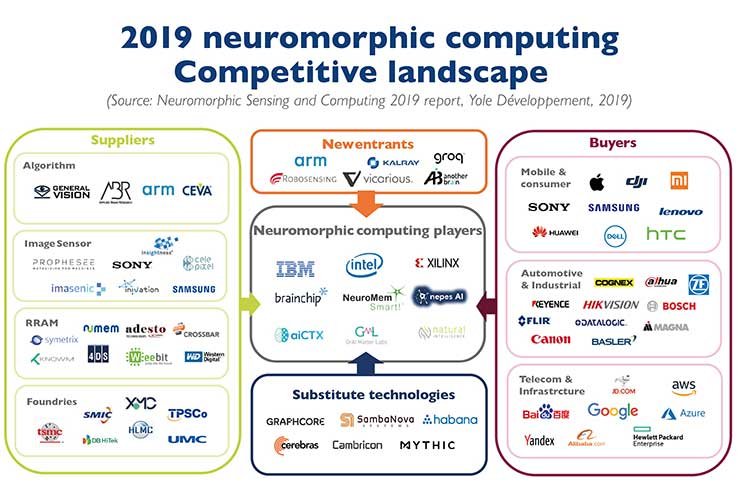

The neuromorphic computing market is expected to reach $550 million by 2026, growing at a CAGR of 89.1%.[3] Major defence R&D and aerospace bodies have been actively investing in cutting-edge neuromorphic computing hardware, software and sensors. Some notable examples follow. In 2008, DARPA awarded a $100 million grant for the SyNAPSE project, which aimed to build a brain-scale neuromorphic processor.[4] The US Air Force Research Laboratory (AFRL), in partnership with IBM, claimed to have built the world's largest neuromorphic processor, Blue Raven, to explore various defence and aerospace applications.[5] In 2021, DARPA launched an initiative to support neuromorphic learning algorithms for event-based infrared (IR) sensors for defence applications.[6] The Australian government's Moon to Mars initiative supported applications of neuromorphic vision sensors for complex in-orbit processes such as spacecraft docking, refuelling, and payload transfer or replacement.[7]
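As a rough sanity check on the market figures, the compound annual growth rate (CAGR) relation, end value = start value × (1 + rate)^years, can be inverted to see what starting market size the forecast implies. The sketch below assumes a five-year horizon (2021 to 2026); that horizon is an assumption made here for illustration and is not stated in the text above, and the function names are hypothetical.

```python
def implied_start_value(end_value, cagr, years):
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years


def project(start_value, cagr, years):
    """Grow a starting value at a constant annual rate for a number of years."""
    return start_value * (1.0 + cagr) ** years


end_2026 = 550.0  # USD million, the forecast figure quoted in the article
cagr = 0.891      # 89.1% per year, as quoted in the article
years = 5         # ASSUMPTION: forecast horizon of 2021 to 2026

start = implied_start_value(end_2026, cagr, years)
print(f"Implied starting market size: about ${start:.1f} million")
print(f"Projected forward again: ${project(start, cagr, years):.1f} million")
```

Under that assumption, the forecast corresponds to a market in the low tens of millions of dollars at the start of the period, which shows how small the base is relative to the headline growth rate.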

Neuromorphic computing chips

The dual-use potential of neuromorphic computing can be gauged from the fact that several major tech-industry players and governments have invested in the domain, directly or indirectly. Companies such as IBM, Intel and Brainchip have developed low-power neuromorphic computing chips that can solve computational problems at a fraction of the energy used by mainstream processors. Several companies and startups (for example, Prophesee) are also developing bio-inspired neuromorphic sensors. Neuromorphic vision sensors have been shown to surpass conventional CMOS vision sensors in properties such as speed, bandwidth and dynamic range. Beyond vision, neuromorphic computing has been applied successfully to diverse fields such as artificial smell (olfaction), artificial touch and skin, robotics, high-speed drones, cyber-security, navigation, optimisation and speech processing.
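For readers unfamiliar with neuromorphic vision sensors, the sketch below illustrates the general event-based principle: instead of transmitting full frames, each pixel reports an event only when its brightness changes by more than a threshold, with a polarity marking brighter or darker. Real sensors do this asynchronously in hardware, per pixel; comparing two frames, as done here, is only a simplified approximation, and the function name `generate_events` and the `threshold` value are illustrative assumptions rather than any vendor's API.

```python
import math


def generate_events(prev_frame, next_frame, threshold=0.2, eps=1e-3):
    """Return (row, col, polarity) events where log intensity changed enough.

    prev_frame, next_frame: 2D lists of pixel intensities in [0, 1].
    threshold: minimum change in log intensity that triggers an event (assumed).
    eps: small offset so that log(0) is never evaluated.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            delta = math.log(n + eps) - math.log(p + eps)
            if delta >= threshold:
                events.append((r, c, +1))   # pixel became brighter
            elif delta <= -threshold:
                events.append((r, c, -1))   # pixel became darker
    return events


prev_frame = [[0.5, 0.5, 0.5],
              [0.5, 0.5, 0.5]]
next_frame = [[0.5, 0.9, 0.5],
              [0.1, 0.5, 0.5]]
events = generate_events(prev_frame, next_frame)
print(events)
```

Only the two pixels that changed produce output, while the four static pixels produce nothing. This sparsity is one intuition for the bandwidth and speed advantages mentioned above.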

India’s significant contribution

Although at a modest scale, universities, startups and industries in India are beginning to make a significant contribution to cutting-edge R&D in neuromorphic computing and its applications. Active Indian neuromorphic R&D groups include IIT-Bombay[11], IIT-Delhi[8,10], IISc[13] and TCS-Research[12], to name a few. Our research group at IIT-Delhi has been working in the domain for several years. In recent work, we have demonstrated ultra-high-speed, high-dynamic-range vision processing using neuromorphic event sensors[8] and state-of-the-art nanoelectronic neuromorphic hardware for low-power speech processing[10]. These results can be extended to intelligence, surveillance and reconnaissance (ISR), data fusion and high-speed tracking applications in complex, resource-constrained environments.

Taking timely cognizance of the subject, DRDO recently introduced a challenge on neuromorphic sensing in its flagship innovation contest, "Dare to Dream 2.0", which drew successful indigenous solutions from Indian universities and startups[9]. The next five years promise to be exciting, with new neuromorphic capabilities and products expected across multiple application domains.

References

1. Wu, Baoyuan, Weidong Chen, Yanbo Fan, Yong Zhang, Jinlong Hou, Jie Liu, and Tong Zhang. “Tencent ML-Images: A large-scale multi-label image database for visual representation learning.” IEEE Access 7 (2019): 172683-172693.

2. https://fortune.com/2021/04/21/ai-carbon-footprint-reduce-environmental-impact-of-tech-google-research-study/#:~:text=For%20instance%2C%20GPT%2D3%2C,passenger%20cars%20for%20a%20year.

3. https://www.businesswire.com/news/home/20210603005505/en/Global-Neuromorphic-Computing-Market-Report-2021-Image-Recognition-Signal-Recognition-Data-Mining—Forecast-to-2026—ResearchAndMarkets.com

4. http://www.indiandefencereview.com/neuromorphic-chips-defence-applications/

5. https://www.afmc.af.mil/News/Article-Display/Article/1582460/afrl-ibm-unveil-worlds-largest-neuromorphic-digital-synaptic-super-computer/

6. https://www.darpa.mil/news-events/2021-07-02

7. https://www.defenceconnect.com.au/key-enablers/8403-thales-australia-and-western-sydney-university-win-space-research-funding?fbclid=IwAR10tWRKSKTe_ye943mAZEiSj7KFtEvZiQdz-XA-XXaa1NBTdDYZEmgp-gg

8. Medya, R., Bezugam, S. S., Bane, D., & Suri, M. (2021, July). 2D Histogram based Region Proposal (H2RP) Algorithm for Neuromorphic Vision Sensors. In International Conference on Neuromorphic Systems 2021 (pp. 1-6).

9. https://tdf.drdo.gov.in/sites/default/files/2021-10/Handbook_DaretoDream_compressed.pdf

10. Shaban, A., Bezugam, S. S., & Suri, M. (2021). An adaptive threshold neuron for recurrent spiking neural networks with nanodevice hardware implementation. Nature Communications, 12, 4234. https://doi.org/10.1038/s41467-021-24427-8

11. Saraswat, V., & Ganguly, U. (2021). Stochasticity invariance control in Pr1-xCaxMnO3 RRAM to enable large-scale stochastic recurrent neural networks. Neuromorphic Computing and Engineering.

12. George, A. M., Banerjee, D., Dey, S., Mukherjee, A., & Balamurali, P. (2020, July). A reservoir-based convolutional spiking neural network for gesture recognition from DVS input. In 2020 International Joint Conference on Neural Networks (IJCNN) (pp. 1-9). IEEE.

13. Annamalai, L., Chakraborty, A., & Thakur, C. S. (2021). EvAn: Neuromorphic Event-Based Sparse Anomaly Detection. Frontiers in Neuroscience, 15, 853.

The writer is a globally recognized deep tech innovator and Professor at IIT-Delhi. He is the founder of the IIT-Delhi start-up CYRAN AI Solutions, which builds edge-AI solutions for real-world applications. The writer was recognized by MIT Technology Review, USA, as one of the top 35 innovators under the age of 35. His work has been recognized by IEEE, INAE, NASI, IEI and IASc. He has filed several patents and authored 90+ publications. Details of his R&D and innovations can be found at: https://web.iitd.ac.in/~manansuri/. The views expressed are personal and do not necessarily reflect the views of Raksha Anirveda.